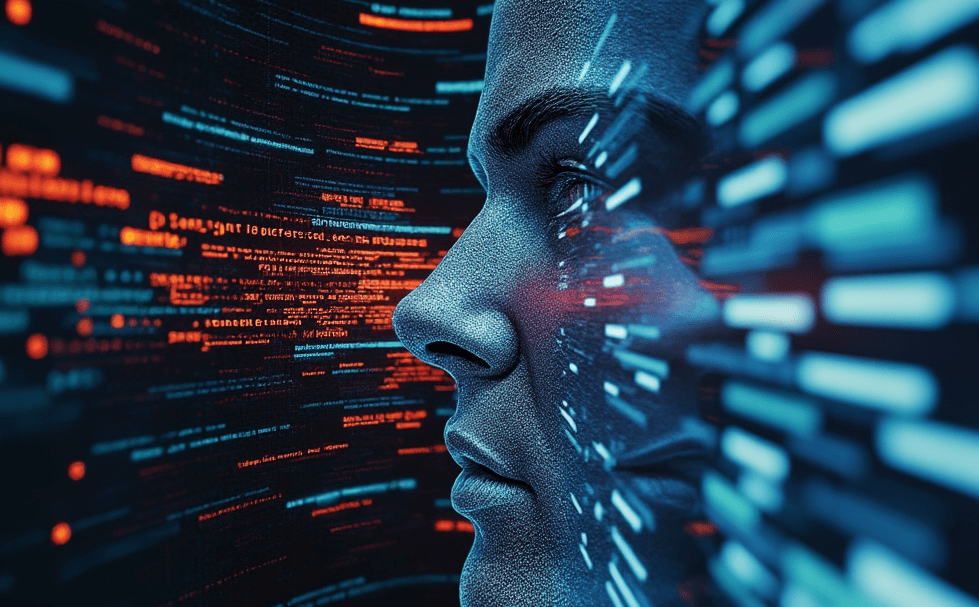

As generative AI continues to evolve rapidly, its ability to produce convincing deepfakes and disinformation is outpacing the growth of media literacy. This widening gap is leaving many Australians vulnerable to manipulation, according to new research from Western Sydney University. The findings, highlighted in the Digital News Report: Australia 2024, reveal a troubling disconnect between the sophistication of AI-generated content and the public’s ability to critically assess it, potentially deepening social divisions across the country.

The Trust Deficit: A Symptom of Disinformation

The report paints a stark picture of declining trust in news media among Australians. Only 26% of the population trusts news overall, and trust in news sourced from social media is even lower, at just 18%. This erosion of trust is largely attributed to the growing prevalence of disinformation on digital platforms. Yet despite rising awareness of these issues, the report finds that Australians' ability to critically evaluate media content has not improved significantly in recent years.

Generative AI: Complicating the Media Landscape

The rapid advancement of generative AI tools has added a new layer of complexity to media literacy. Associate Professor Tanya Notley from Western Sydney University explains that the sophistication of AI-generated content makes it increasingly difficult for the average person to identify manipulated material. “AI certainly makes media literacy more complicated because the expectation is that it’s getting harder and harder to identify where AI has been used,” Notley said. “It’s going to be used in more sophisticated ways to manipulate people with disinformation, and we can already see that happening.”

The Need for Regulation and Education

Combating the risks posed by generative AI requires a multifaceted approach that includes both regulation and education. Regulatory efforts have made some progress, such as the U.S. Senate’s recent bill to outlaw pornographic deepfakes, but Notley emphasizes that education is equally crucial. “There is a growing concern about a new social division emerging over who can develop the ability to become literate with AI-generated material,” she noted. This divide could exacerbate existing inequalities, particularly between younger, digitally savvy individuals and older generations or people from lower socio-economic backgrounds.

Generational and Socio-Economic Gaps in Media Literacy

The research highlights a concerning gap: younger Australians, particularly those aged 18–29, tend to have stronger media literacy skills, especially if they are in higher education or work in digitally focused jobs. In contrast, older Australians, people with lower levels of education, and those from disadvantaged socio-economic backgrounds are significantly less likely to develop the skills needed to navigate the digital landscape effectively.

Notley expressed alarm at the potential consequences of this widening divide. “I’m concerned with the implications of a growing gap between those who are equipped to navigate the digital landscape and those who are not,” she said. The divide is particularly worrisome given that Australia has no national strategy to address media literacy comprehensively.

The Call for a National Media Literacy Strategy

Australia’s lagging response to the need for enhanced media literacy is becoming increasingly apparent. “Australia is one of a few laggard advanced democracies now that has no national strategy,” Notley pointed out. She argues that a national strategy for media literacy would provide clear targets and necessary funding to improve these essential skills across the population.

The report suggests that media literacy initiatives must be more accessible and engaging, particularly for adults who are at greater risk of falling behind. Notley recommends that online platforms, where misinformation is most prevalent, play a proactive role in promoting media literacy. Additionally, public cultural institutions like national libraries and public broadcasters could be leveraged to reach a broader audience and foster greater trust in media literacy programs.

The rise of generative AI presents both opportunities and significant challenges for society. As AI-generated content becomes more sophisticated, robust media literacy becomes ever more important. The findings from the Digital News Report: Australia 2024 underscore the urgent need for a national strategy to close this gap, ensuring that all Australians, regardless of age or socio-economic status, are equipped to critically assess the media they consume. Without swift action, the divide between the media-literate and the media-vulnerable may continue to widen, leaving many at risk in an increasingly digital world.